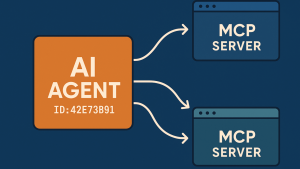

As AI agents—autonomous software entities capable of performing tasks, making decisions, and interacting with systems or humans—become increasingly integral to economic and social ecosystems, the need to uniquely identify and regulate them grows.

Legal Entity Identifiers (LEIs), standardized 20-character alphanumeric codes under the ISO 17442 standard, are traditionally used to identify legal entities like companies or government organizations in financial transactions.
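The 20-character format can be checked mechanically. The last two characters of an LEI are check digits computed with the ISO 7064 MOD 97-10 scheme. The sketch below illustrates that check; the function names are hypothetical, and it only confirms that a string is well formed, not that the LEI has actually been issued.

```python
import string

# Allowed characters and their numeric values: 0-9 keep their value, A=10 ... Z=35.
_ALPHABET = string.digits + string.ascii_uppercase


def _to_number(text: str) -> int:
    """Expand each character to its numeric value and read the result as one integer."""
    return int("".join(str(_ALPHABET.index(ch)) for ch in text))


def lei_check_digits(prefix18: str) -> str:
    """Compute the two check digits for an 18-character LEI prefix (MOD 97-10)."""
    if len(prefix18) != 18 or any(ch not in _ALPHABET for ch in prefix18):
        raise ValueError("prefix must be 18 characters from 0-9 and A-Z")
    return f"{98 - _to_number(prefix18 + '00') % 97:02d}"


def is_valid_lei(lei: str) -> bool:
    """Return True if the string has a well-formed LEI shape and valid check digits."""
    if len(lei) != 20 or any(ch not in _ALPHABET for ch in lei):
        return False
    if not lei[18:].isdigit():  # check digits are always numeric
        return False
    return _to_number(lei) % 97 == 1
```

A registry for agent LEIs could run this check at intake, before any lookup against issued records.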

Extending LEIs to AI agents within the context of a Digital Public Infrastructure (DPI) framework presents a novel approach to ensuring transparency, accountability, and trust in their operations.

This article examines how AI agents might be assigned LEIs, covering the motivations, technical implementation, governance considerations, and challenges, and how open-source platforms and blockchain technology could support the DPI principles of inclusivity, interoperability, and public good.

Agent ID

Writing on LinkedIn, George Fletcher explores the idea that, because AI agents will act to complete transactions, they merit a digital identity that goes beyond simple technical authentication: in short, a legal identifier.

The motivation for assigning LEIs to AI agents stems from their expanding role in financial, legal, and operational contexts.

AI agents are increasingly deployed in areas like algorithmic trading, supply chain management, smart contracts, and decentralized autonomous organizations (DAOs), where they act as proxies for organizations or individuals. Without unique identifiers, tracking their actions, ensuring compliance with regulations (e.g., anti-money laundering or sanctions screening), or attributing responsibility for their decisions becomes challenging.

LEIs for Agents

Assigning LEIs to AI agents could provide a standardized way to register and verify them, linking their activities to responsible legal entities (e.g., the organization deploying the agent) while enhancing transparency in digital ecosystems.

For instance, in cross-border payments, an AI agent executing transactions could carry an LEI to clarify its origin and authority, aligning with standards like ISO 20022. Similarly, in KYC processes, LEIs could help verify the legitimacy of AI-driven services, reducing fraud risks.

Technically, implementing LEIs for AI agents within a DPI framework can leverage open-source platforms and blockchain technology to ensure scalability and trust. A permissioned blockchain, such as Hyperledger Fabric or Corda, could serve as a decentralized registry for issuing and managing AI agent LEIs.

Each AI agent could be assigned an LEI tied to its deploying entity, encoded as a smart contract containing Level 1 data (e.g., agent name, associated legal entity, purpose) and potentially Level 2 data (e.g., hierarchical relationships to parent organizations or other agents).
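To make the Level 1 / Level 2 split concrete, here is a minimal sketch of what such a record might look like before it is written to a ledger. The class name, field names, and sample values are all hypothetical; no agent-specific LEI schema exists today, and a real smart contract would be written in the chaincode language of the chosen platform.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class AgentLeiRecord:
    """Hypothetical registry entry for an AI agent (not an official GLEIF schema)."""

    # Level 1 data: who the agent is and who answers for it.
    lei: str
    agent_name: str
    responsible_entity_lei: str
    purpose: str
    # Level 2 data: relationships to parent organizations or other agents.
    parent_leis: tuple[str, ...] = ()

    def to_json(self) -> str:
        """Serialize deterministically so the same record always hashes the same."""
        return json.dumps(asdict(self), sort_keys=True)


# Placeholder identifiers for illustration only; these are not real LEIs.
record = AgentLeiRecord(
    lei="AGENT-LEI-PLACEHOLDER",
    agent_name="Invoice Reconciliation Agent",
    responsible_entity_lei="OWNER-LEI-PLACEHOLDER",
    purpose="accounts-payable automation",
    parent_leis=("OWNER-LEI-PLACEHOLDER",),
)
```

Making the record immutable (`frozen=True`) mirrors the append-only nature of a ledger: a change would be a new record, not an edit to an old one.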

Global Legal Entity Identifier Foundation (GLEIF)

The Global Legal Entity Identifier Foundation’s (GLEIF) open-source tools, such as the Lenu Python library for assigning Entity Legal Form codes, could be adapted to classify AI agents under a new category, reflecting their non-human status.

The verifiable LEI (vLEI) framework, which uses the Key Event Receipt Infrastructure (KERI) protocol, could enable AI agents to carry cryptographically secure identifiers, allowing real-time verification via GLEIF’s LEI Search API. Open-source databases like PostgreSQL could store agent metadata, while the OpenAPI specification and OpenSSL could support interoperable, secured interfaces with DPI components such as digital wallets or payment systems.
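As a rough illustration of the lookup side only, the sketch below fetches an LEI record over HTTP and extracts a few fields. The base URL and the JSON field paths reflect the public GLEIF API as best understood here and should be treated as assumptions to check against GLEIF's API documentation; the function names are hypothetical. This is a plain record lookup and does not perform any vLEI or KERI cryptographic verification.

```python
import json
from urllib.request import Request, urlopen

# Assumed base URL of the public GLEIF API; verify against the official docs.
GLEIF_API = "https://api.gleif.org/api/v1/lei-records/"


def fetch_lei_record(lei: str) -> dict:
    """Fetch one LEI record as a parsed JSON document (network call)."""
    request = Request(GLEIF_API + lei, headers={"Accept": "application/vnd.api+json"})
    with urlopen(request, timeout=10) as response:
        return json.load(response)


def summarize(record: dict) -> dict:
    """Pull out the fields a verifier would likely check first.

    The field paths below are assumptions about the response layout.
    """
    attrs = record["data"]["attributes"]
    return {
        "lei": attrs["lei"],
        "legal_name": attrs["entity"]["legalName"]["name"],
        "registration_status": attrs["registration"]["status"],
    }
```

A caller would pass the result of `fetch_lei_record(...)` to `summarize(...)` and reject any agent whose responsible entity does not have an acceptable registration status.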

Governance is a critical consideration in assigning LEIs to AI agents. GLEIF’s existing framework, which oversees LEI issuance through accredited Local Operating Units (LOUs), could be extended to include AI agents under a modified governance model.

This would require defining AI agents as a distinct type of entity within the ISO 17442 standard, potentially classifying them as “non-legal entities” with ties to a legal entity (e.g., a corporation or DAO). Collaboration between regulators, GLEIF, and open-source communities would be essential to establish policies for registration, data quality, and renewal processes.

For example, an AI agent’s LEI could require annual validation to ensure its operational status and compliance with evolving regulations. Blockchain-based governance, using decentralized identifiers (DIDs) and smart contracts, could automate oversight while maintaining transparency, aligning with DPI’s open data principles, such as those in the International Open Data Charter.
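The annual validation rule is simple enough to sketch directly. The 365-day window and the status labels below are assumptions chosen for illustration; an actual policy would be set by the governing body, and on a ledger this logic would live in a smart contract.

```python
from datetime import date, timedelta

# Assumed validation window; "annual" is taken as 365 days for this sketch.
VALIDATION_INTERVAL = timedelta(days=365)


def validation_status(last_validated: date, today: date) -> str:
    """Return "CURRENT" while the last validation is within the window, else "LAPSED"."""
    due = last_validated + VALIDATION_INTERVAL
    return "CURRENT" if today <= due else "LAPSED"


def agents_needing_validation(last_validated_by_lei: dict[str, date], today: date) -> list[str]:
    """List the identifiers whose validation has lapsed, in sorted order."""
    return sorted(
        lei
        for lei, validated in last_validated_by_lei.items()
        if validation_status(validated, today) == "LAPSED"
    )
```

Passing `today` explicitly, instead of reading the clock inside the function, keeps the rule deterministic, which matters if the same check has to produce identical results on every node of a ledger.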

Several use cases illustrate the potential of LEIs for AI agents. In financial markets, AI agents executing high-frequency trades could carry LEIs to comply with regulations like MiFID II or Dodd-Frank, enabling regulators to trace activities to responsible entities.

In supply chains, AI agents managing logistics or verifying intellectual property (e.g., via EBSI-ELSA) could use LEIs to establish trust in paperless trade. For DAOs, where AI agents often execute governance decisions, LEIs could clarify the DAO’s legal structure and operational authority.

In digital identity systems, vLEIs could secure AI agents within digital wallets, such as the EU Digital Identity Wallet, ensuring authenticated interactions. These applications highlight how LEIs could integrate AI agents into DPI, fostering trust and reducing risks like fraud or unaccountable automation.

Challenges

However, significant challenges must be addressed. First, defining the legal status of AI agents is complex, as they lack traditional legal personhood. A new LEI category or standard modification may be needed, requiring consensus among global regulators and GLEIF.

Second, ensuring data quality and preventing misuse (e.g., duplicative or fraudulent LEIs) demands robust verification mechanisms, potentially using blockchain’s immutability and GLEIF’s challenge facility.
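One small piece of such a verification mechanism is refusing duplicate registrations at intake. The sketch below is an in-memory stand-in for a registry, with hypothetical class and method names; it treats an agent name plus its responsible entity as the uniqueness key, which is an assumption about what "duplicate" would mean in practice.

```python
class DuplicateRegistration(Exception):
    """Raised when an agent or identifier is already registered."""


class AgentRegistry:
    """In-memory stand-in for a ledger-backed registry (illustrative only)."""

    def __init__(self) -> None:
        self._lei_by_key: dict[tuple[str, str], str] = {}

    @staticmethod
    def _key(agent_name: str, responsible_entity_lei: str) -> tuple[str, str]:
        # Normalize case and whitespace so trivially different spellings collide.
        normalized_name = " ".join(agent_name.casefold().split())
        return normalized_name, responsible_entity_lei.upper()

    def register(self, lei: str, agent_name: str, responsible_entity_lei: str) -> None:
        key = self._key(agent_name, responsible_entity_lei)
        if key in self._lei_by_key:
            raise DuplicateRegistration(f"agent already registered as {self._lei_by_key[key]}")
        if lei in self._lei_by_key.values():
            raise DuplicateRegistration(f"identifier {lei} is already assigned")
        self._lei_by_key[key] = lei
```

Name normalization only catches trivial variants; detecting deliberately disguised duplicates would still need the human review and challenge processes described above.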

Third, adoption barriers, such as cost and technical complexity, could hinder implementation, particularly for smaller organizations or open-source developers. These can be mitigated by leveraging open-source platforms to reduce costs, automating registration via APIs, and advocating for regulatory mandates, such as requiring LEIs for AI-driven financial transactions.

Finally, ethical concerns, such as over-attributing autonomy to AI agents, necessitate clear policies linking agents to human or organizational accountability.

A practical implementation could involve a pilot within a DPI system, such as a blockchain-based registry for AI agents in a national payment or supply chain platform. Using Hyperledger Fabric, LOUs could issue LEIs to AI agents, storing data in PostgreSQL and linking to GLEIF’s Global LEI Index. Smart contracts could automate renewals and compliance checks, while vLEIs ensure secure authentication.

Conclusion

Assigning LEIs to AI agents within a DPI framework is a forward-thinking approach to integrating these entities into digital ecosystems. With open-source blockchain platforms like Hyperledger Fabric, GLEIF’s tools, and KERI-based vLEIs, AI agents could be uniquely identified, verified, and governed, enhancing transparency and trust.

Overcoming challenges like legal definitions, data quality, and adoption requires collaboration among regulators, developers, and GLEIF, aligning with DPI’s public good ethos. As AI agents proliferate, LEIs could become a cornerstone of their regulation, ensuring accountability in an increasingly automated world.